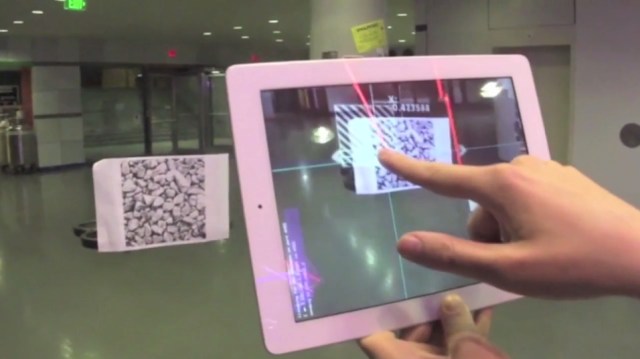

Created by the Tangible Media Group at MIT in collaboration with Sony Corporation, exTouch is a system for _Spatially-Aware Embodied Manipulation of Actuated Objects Mediated by Augmented Reality_. In other words, exTouch is an interface that lets you manipulate actuated objects in physical space using augmented reality. It extends the user's touchscreen interactions into the real world by giving spatial control over the actuated object. When users touch a device shown in the live video on the screen, they can change its position and orientation with multi-touch gestures or by physically moving the screen relative to the controlled object.

The team demonstrated the system with applications such as an omnidirectional vehicle, a drone, and moving furniture for a reconfigurable room. They envision that this spatially-aware interaction will further enhance human physical ability by extending user interaction into space. The client mobile application runs on an iPad and was built with openFrameworks. The omnidirectional vehicle was built with Arduino, and the system uses Wi-Fi for communication between the client and the device.

The project was introduced with a demo and a presentation at TEI 2013.

Here's a video of the system in action.

_Created by Shunichi Kasahara, Ryuma Niiyama, Valentin Heun and Hiroshi Ishii._ Project Page